In 2026, competition in AI-assisted coding has reached a boiling point. The recently launched GPT-5.3 Codex played a role in its own development: OpenAI used early versions of the model to debug training runs and manage deployment systems, a self-improving loop that shows how far this technology has come. Gemini 3.0 Pro made its mark earlier, in November 2025, by leading on major benchmarks. But which one is better for coding, and which one offers more bang for your buck? This GPT-5.3 Codex vs Gemini 3.0 Pro comparison takes a closer look.

GPT-5.3 Codex vs Gemini 3.0 Pro (Brief Overview)

Both models pitch themselves as more than just code writers. They want to be coding agents, not autocomplete tools on steroids.

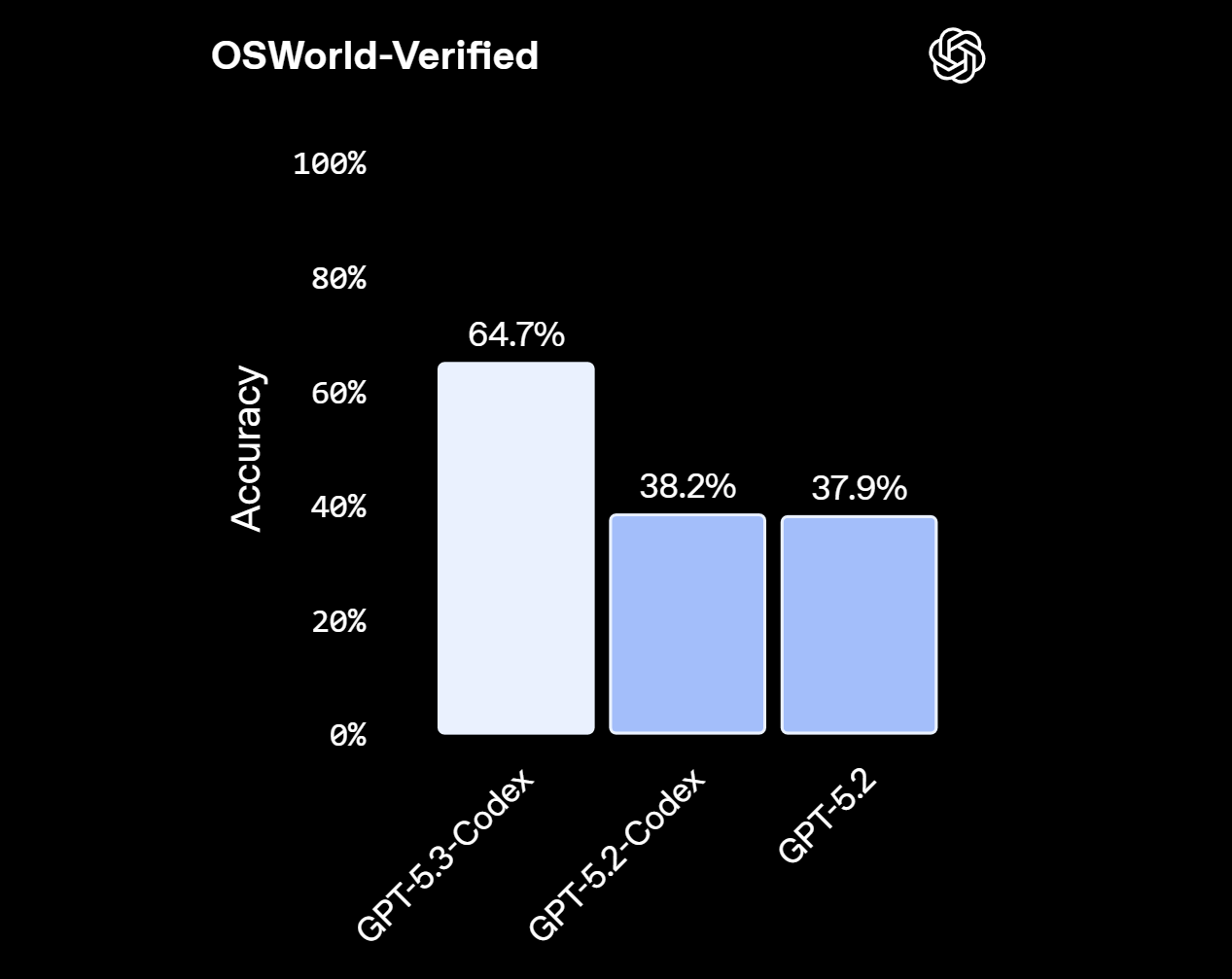

GPT-5.3 Codex is OpenAI’s most capable agentic coding model to date. It merges the frontier coding performance of GPT-5.2-Codex with the broader reasoning abilities of GPT-5.2 into one unified system. The result runs 25% faster than its predecessor, achieves its results with fewer tokens, and can steer itself across long, multi-step engineering tasks without losing context. OpenAI describes the transition bluntly: Codex goes from an agent that can write and review code to one that can do nearly anything developers and professionals can do on a computer.

Gemini 3.0 Pro, on the other hand, arrived as Google’s most intelligent model ever built. It was designed from the ground up to excel at what Google calls “vibe coding” and agentic coding simultaneously. It topped the WebDev Arena leaderboard at 1,487 Elo on launch day, and independent AI benchmarking organization Artificial Analysis crowned it the global leader in AI intelligence at the time, marking the first time Google had ever held that position.

These two models are heading in the same direction. They just come from very different starting points.

GPT-5.3 Codex vs Gemini 3.0 Pro – Benchmark Comparison

Before diving into what developers actually experience, here is what the official and third-party benchmarks say:

| Benchmark | GPT-5.3 Codex | Gemini 3.0 Pro |
| --- | --- | --- |
| SWE-Bench Verified | 77.9% | 76.2% |
| Terminal-Bench 2.0 | 77.3% | 54.2% |
| LiveCodeBench Pro (Elo) | ~2,240 | 2,439 |
| AIME 2025 (w/ code exec) | 100% | 100% |

Reading this table straight is tricky because the models were not always tested on identical benchmarks. SWE-Bench Verified and SWE-Bench Pro are related but not the same test. Gemini 3.0 Pro’s 76.2% on SWE-Bench Verified is a strong number, and it was achieved on a benchmark that specifically tests autonomous, agent-driven code fixes. GPT-5.3 Codex’s 56.8% on SWE-Bench Pro is on a harder, more contamination-resistant version of the test spanning four programming languages. These are not directly apples-to-apples, and that distinction matters when evaluating the hype.

Terminal-Bench 2.0 tells a cleaner story. GPT-5.3 Codex’s 77.3% is a commanding lead over Gemini 3.0 Pro’s 54.2%. This benchmark measures real terminal skills, the kind of low-level command execution that coding agents rely on when doing autonomous work. For developers building CLI tools, DevOps pipelines, or anything involving shell scripting, this gap is meaningful.

What Developers Are Actually Experiencing

Benchmarks set expectations. Real development sessions expose the truth.

In practical hands-on tests run by the developer community, a few consistent patterns have emerged:

Where GPT-5.3 Codex stands out:

- Long, multi-step engineering tasks with high token efficiency

- Terminal-driven and DevOps-style workflows

- Consistent behavior across complex, multi-file codebases

- Real-time collaboration mid-task without losing context

- Stronger performance on competitive algorithm problems (e.g., LeetCode Hard)

Where Gemini 3.0 Pro stands out:

- Frontend and UI generation (repeatedly the top performer in vibe-coding tests)

- Agent-style workflows that follow documentation closely

- Code that is lean, modular, and low in cyclomatic complexity

- Projects requiring the full 1 million token context window

- Integration into Google’s ecosystem (Vertex AI, Antigravity, AI Studio)

Independent testing from Composio found that Gemini 3.0 Pro handled UI builds and agent workflows more cleanly than earlier GPT models, requiring far fewer follow-ups. However, the same testers noted that on algorithm-heavy problems, GPT-5.2-Codex (the predecessor to 5.3) produced correct solutions more reliably. With GPT-5.3 Codex being 25% faster and more token-efficient, that trend is likely to hold or widen.

A separate benchmark run from Tensorlake’s coding challenges concluded that GPT-5.2 delivered more polished, production-ready code with less cleanup needed compared to Gemini 3.0 Pro, which tended to implement core logic correctly but leave out edge cases, customization options, and usability features.

GPT-5.3 Codex vs Gemini 3.0 Pro – Context Windows and Token Efficiency

This is a major practical differentiator.

Gemini 3.0 Pro ships with a 1 million token context window and 64,000 max output tokens. That is enormous. If you are working on a large legacy codebase, analyzing years of logs, or building a system that needs to hold an entire architecture in context, Gemini 3.0 Pro has a structural advantage. GPT-5.3 Codex's 400K-token window is solid but considerably smaller in comparison.

That said, GPT-5.3 Codex achieves its benchmark results using fewer tokens than any prior Codex model. This is not a trivial achievement. For teams operating on API budgets, token efficiency translates directly to cost efficiency. If a model hits 95% of the quality at 70% of the token cost, that compound advantage matters over thousands of developer hours.
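The "95% of the quality at 70% of the token cost" scenario above is easy to put into numbers. The sketch below treats quality and cost as simple ratios against a baseline model (1.0 each), which is an assumption for illustration; the $10,000 monthly spend is a hypothetical figure, not data from either vendor.

```python
def quality_per_cost(relative_quality: float, relative_cost: float) -> float:
    """Quality delivered per unit of spend, relative to a baseline of 1.0 / 1.0."""
    if relative_cost <= 0:
        raise ValueError("relative_cost must be positive")
    return relative_quality / relative_cost


def projected_spend(baseline_spend: float, relative_cost: float) -> float:
    """Spend for the same workload if token cost scales by relative_cost."""
    return baseline_spend * relative_cost


ratio = quality_per_cost(0.95, 0.70)
print(f"quality per dollar vs baseline: {ratio:.3f}")  # 1.357

# Hypothetical baseline: $10,000/month of API spend on the less efficient model.
print(f"projected spend: ${projected_spend(10_000.0, 0.70):,.2f}")  # $7,000.00
```

In this toy model, giving up 5% of quality buys roughly 36% more quality per dollar, which is why token efficiency compounds at team scale.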

The practical recommendation here: if your work routinely involves processing massive codebases in a single pass, Gemini 3.0 Pro’s million-token window is genuinely valuable. For most everyday coding workflows, GPT-5.3 Codex’s efficiency advantage tips the scales.
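Whether a codebase fits "in a single pass" can be roughly estimated before choosing a model. This is a minimal sketch that assumes the common rule of thumb of about four characters per token (real tokenizers vary by language and content), uses the 400K and 1M window sizes quoted in this article, and reserves 64K tokens for output by default. The helper names and the file-suffix filter are hypothetical.

```python
from pathlib import Path

# Assumption: ~4 characters per token, a rough heuristic; real tokenizers differ.
CHARS_PER_TOKEN = 4

# Context window sizes as quoted in this article.
CONTEXT_WINDOWS = {
    "gpt-5.3-codex": 400_000,
    "gemini-3.0-pro": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from character count (rounds up)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_repo_tokens(root: str, suffixes=(".py", ".js", ".ts", ".go", ".java")) -> int:
    """Sum estimated tokens over source files under `root` (hypothetical helper)."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def models_that_fit(prompt_tokens: int, reserved_output: int = 64_000) -> list[str]:
    """Return models whose window holds the prompt plus reserved output space."""
    return [
        name for name, window in CONTEXT_WINDOWS.items()
        if prompt_tokens + reserved_output <= window
    ]


if __name__ == "__main__":
    # 2.4M characters of source is roughly 600K tokens under the 4-chars heuristic.
    tokens = estimate_tokens("x" * 2_400_000)
    print(tokens, models_that_fit(tokens))  # 600000 ['gemini-3.0-pro']
```

For a real repository you would call `estimate_repo_tokens("path/to/repo")`; for anything near a window boundary, count with the provider's own tokenizer instead of the heuristic.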

GPT-5.3 Codex vs Gemini 3.0 Pro – Pricing and Access

| Pricing | GPT-5.3 Codex | Gemini 3.0 Pro |
| --- | --- | --- |
| Input (per 1M tokens) | $1.75 | $2.00 |
| Output (per 1M tokens) | $14.00 | $12.00 |
| Context Window | 400K tokens | 1M+ tokens |
| Minimum Subscription | ChatGPT Plus (any paid plan) | Vertex AI tiered plans |
| Tiered Discounts | Available >200K prompts | Free tier ≤1,500 searches |

Access and pricing affect which model you can actually use day-to-day.

Here is the current state as of February 2026:

- GPT-5.3 Codex: Available to all paid ChatGPT users (Plus, Pro, Business, Enterprise). Accessible via the Codex app, CLI, IDE extension, and web. API access was still rolling out at launch time.

- Gemini 3.0 Pro: Available via the Gemini app, Google AI Studio, Vertex AI, Gemini CLI, and the Google Antigravity platform. API pricing is listed at $2 per million input tokens and $12 per million output tokens for prompts under 200k tokens, with higher rates for larger context usage.

- Third-party integrations: Gemini 3.0 Pro is already embedded in Cursor, GitHub, JetBrains, Replit, and more. GPT-5.3 Codex’s broader ecosystem integration is expanding but slightly behind on third-party tooling.
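To compare the two price lists on a concrete request, here is a small cost estimator. It is a sketch, assuming the per-million-token rates quoted in this section ($1.75/$14 for GPT-5.3 Codex; $2/$12 for Gemini 3.0 Pro on prompts under 200K tokens). The article does not state Gemini's long-context rates, so they are left unset and the function raises rather than guessing; the `PriceSchedule` type and the 50K/5K request are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PriceSchedule:
    input_per_m: float                          # USD per 1M input tokens
    output_per_m: float                         # USD per 1M output tokens
    tier_threshold: Optional[int] = None        # prompt size above which other rates apply
    long_input_per_m: Optional[float] = None    # USD per 1M input tokens above threshold
    long_output_per_m: Optional[float] = None   # USD per 1M output tokens above threshold


# Rates as quoted in this article; long-context rates deliberately not filled in.
GPT_53_CODEX = PriceSchedule(input_per_m=1.75, output_per_m=14.00)
GEMINI_30_PRO = PriceSchedule(input_per_m=2.00, output_per_m=12.00, tier_threshold=200_000)


def request_cost(s: PriceSchedule, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request under a price schedule."""
    inp, out = s.input_per_m, s.output_per_m
    if s.tier_threshold is not None and input_tokens > s.tier_threshold:
        if s.long_input_per_m is None or s.long_output_per_m is None:
            raise ValueError("long-context rates not set; take them from current pricing docs")
        inp, out = s.long_input_per_m, s.long_output_per_m
    return (input_tokens * inp + output_tokens * out) / 1_000_000


# Hypothetical request: 50K tokens of code in, 5K tokens of patch out.
for name, sched in [("GPT-5.3 Codex", GPT_53_CODEX), ("Gemini 3.0 Pro", GEMINI_30_PRO)]:
    print(f"{name}: ${request_cost(sched, 50_000, 5_000):.4f}")
# GPT-5.3 Codex: $0.1575
# Gemini 3.0 Pro: $0.1600
```

Because GPT-5.3 Codex is cheaper per input token and Gemini 3.0 Pro is cheaper per output token, the cheaper option flips with the input/output mix, and any difference in tokens used per task (the efficiency point made earlier) shifts the result further.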

For developers already inside the Google ecosystem, Gemini 3.0 Pro’s integrations offer a frictionless experience. For teams already using ChatGPT or OpenAI’s API stack, GPT-5.3 Codex fits naturally into existing workflows.

Which One is More Secure?

GPT-5.3 Codex is the first model OpenAI has classified as “High capability” under its Preparedness Framework for cybersecurity-related tasks. It is the first Codex model specifically trained to identify software vulnerabilities, and it ships with a comprehensive safety stack including automated monitoring, a Trusted Access for Cyber program, and a $10 million commitment in API credits toward cyber defense for open-source projects.

This cuts both ways. It means GPT-5.3 Codex is genuinely more powerful for security research. It also means some elevated-risk requests are automatically routed to an earlier model version. For developers doing legitimate security work, OpenAI has set up a path for full access through its Trusted Access program.

Gemini 3.0 Pro does not carry the same “High capability” cybersecurity classification, which means fewer guardrails but also fewer automatic rerouting events for security-adjacent code.

GPT-5.3 Codex vs Gemini 3.0 Pro – Verdict by Use Case

Picking a winner depends entirely on what you are building. Here is a breakdown:

Choose GPT-5.3 Codex if you:

- Work heavily in terminal and shell environments

- Need an AI agent that maintains context across very long, autonomous sessions

- Do multi-language, multi-file software engineering

- Prioritize token efficiency for API cost management

- Need enterprise security certifications (SOC 2 Type 2 compliance)

- Work on algorithmic or logic-heavy problems

Choose Gemini 3.0 Pro if you:

- Do a lot of frontend and UI development

- Need a 1 million token context window for massive codebases

- Are building within Google's ecosystem (Vertex AI, Google Cloud, Antigravity)

- Prefer native multimodal input (analyzing UI screenshots, error images, video walkthroughs)

- Want the best tool for agent-style workflows that follow documentation precisely

Consider both if you:

- Manage a team of developers with varied workflows

- Are doing mixed frontend-backend work where no single model dominates

- Have the budget to use each model where it excels
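For teams taking the "use both" route, the verdict lists above can be encoded as a simple routing table. This is a hypothetical sketch: the task categories paraphrase the lists, the model label strings are placeholders rather than either vendor's official identifiers, and the rule that oversized prompts go to the 1M-token model is an assumption based on the context-window figures in this article.

```python
from enum import Enum


class Task(Enum):
    # Task categories paraphrased from the verdict lists (hypothetical labels).
    TERMINAL = "terminal"
    LONG_AGENTIC = "long_agentic"
    MULTI_FILE = "multi_file"
    ALGORITHMIC = "algorithmic"
    FRONTEND_UI = "frontend_ui"
    MULTIMODAL = "multimodal"
    DOC_DRIVEN_AGENT = "doc_driven_agent"


# Placeholder model labels; substitute the identifiers your provider actually exposes.
CODEX = "gpt-5.3-codex"
GEMINI = "gemini-3.0-pro"

ROUTES = {
    Task.TERMINAL: CODEX,
    Task.LONG_AGENTIC: CODEX,
    Task.MULTI_FILE: CODEX,
    Task.ALGORITHMIC: CODEX,
    Task.FRONTEND_UI: GEMINI,
    Task.MULTIMODAL: GEMINI,
    Task.DOC_DRIVEN_AGENT: GEMINI,
}

CODEX_CONTEXT_WINDOW = 400_000  # window size quoted in this article


def route(task: Task, prompt_tokens: int = 0) -> str:
    """Pick a model per the verdict lists; oversized prompts go to the 1M-token model."""
    if prompt_tokens > CODEX_CONTEXT_WINDOW:
        return GEMINI
    return ROUTES[task]


print(route(Task.TERMINAL))                         # gpt-5.3-codex
print(route(Task.FRONTEND_UI))                      # gemini-3.0-pro
print(route(Task.TERMINAL, prompt_tokens=600_000))  # gemini-3.0-pro
```

Keeping the mapping as data rather than branching logic makes it cheap to re-point a category when either model is updated.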

Independent analysis from Vertu suggests that combining Gemini 3.0 Pro with a GPT-5-series Codex model costs 50 to 100 percent more but can deliver 40 to 60 percent better results across diverse task types, making the multi-model approach worth it for professional development teams.
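Taking the quoted ranges at face value, a quick calculation shows how results per dollar move across them. This sketch assumes "better results" and "costs more" can be treated as simple multipliers on a single-model baseline, which is a simplification for illustration rather than how the cited analysis necessarily measured them.

```python
from itertools import product


def value_ratio(cost_increase: float, quality_gain: float) -> float:
    """Results per dollar of the combined setup relative to one model (1.0 = parity)."""
    return (1 + quality_gain) / (1 + cost_increase)


# Ranges as quoted: 50-100% more cost, 40-60% better results.
cost_bounds = (0.50, 1.00)
gain_bounds = (0.40, 0.60)

ratios = [value_ratio(c, g) for c, g in product(cost_bounds, gain_bounds)]
print(f"worst case: {min(ratios):.3f}")  # 0.700 (1.40 / 2.00)
print(f"best case:  {max(ratios):.3f}")  # 1.067 (1.60 / 1.50)
```

Under this simplification, the combined setup ranges from about 30% less to about 7% more output per dollar, so the case for it rests mainly on the absolute quality gain rather than on efficiency.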

What Sam Altman Said (And Why It Matters)

Shortly after the GPT-5.3 Codex launch, OpenAI CEO Sam Altman posted on X: “It was amazing to watch how much faster we were able to ship 5.3-Codex by using 5.3-Codex, and for sure this is a sign of things to come.”

This is not just a marketing quote. It describes a feedback loop. The model was used to accelerate its own development, debug training runs, and manage deployment. If this recursive development cycle continues, the next generation of Codex models may arrive significantly faster and with compounding capability improvements.

Gemini 3.0 Pro’s strength, meanwhile, is the breadth of Google’s infrastructure behind it. The model was processing over 1 trillion tokens per day on Google’s API within a month of launch. That kind of scale gives Google an enormous feedback signal for future improvements.

Both companies are moving fast. Neither model is going to look the same in six months.

Wrapping Up

The most useful thing to understand is that there is no clean, single answer to whether GPT-5.3 Codex or Gemini 3.0 Pro is better for coding. GPT-5.3 Codex wins on terminal-based workflows, token efficiency, and long-horizon autonomous coding sessions. Gemini 3.0 Pro wins on UI generation, massive context windows, and Google ecosystem integration. For most backend engineers and DevOps-heavy teams, GPT-5.3 Codex is the sharper tool. For frontend developers, full-stack builders working in large codebases, or teams already living in Google’s infrastructure, Gemini 3.0 Pro is the smarter pick. If the budget allows, using both strategically is the approach that the data consistently supports.